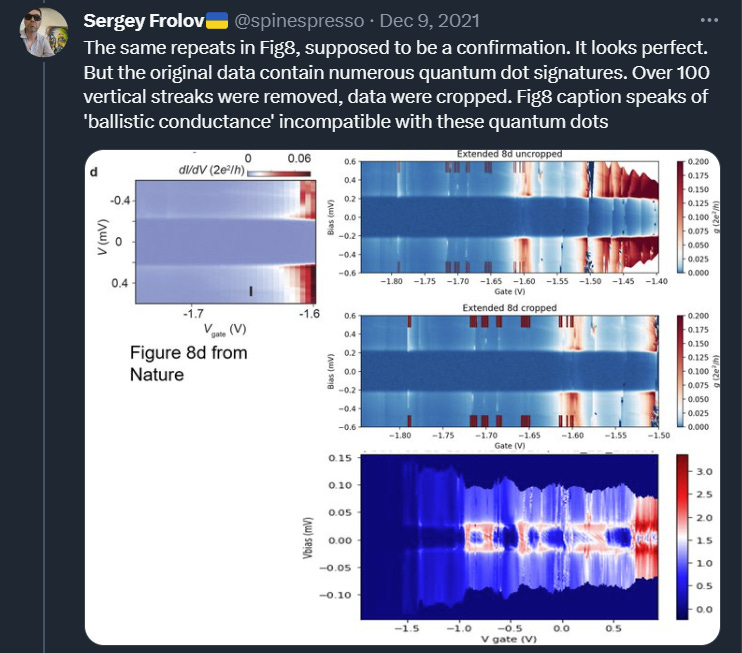

It’s been 2.5 years since Nature retracted “Quantized Majorana Conductance” by a Delft-Maryland team. Since then, my colleague Vincent Mourik and I have flagged three more papers from some of the same authors for retraction. Nature retracted one of these three 1.5 years ago, even though the key authors of that second paper fought bitterly against retraction; the editors nonetheless presented it as the authors’ decision. The authors also continue to fight against two other retractions we think should happen, at Nature Nanotechnology and Nature Communications. You can read our investigations here (or here) and here.

A Delft paper in Nature Communications remains without a retraction, or even an expression of concern, despite extensive data issues admitted by the key authors themselves. The journal also denied other authors’ requests to be removed from the paper.

https://twitter.com/spinespresso/status/1468870460100251650

The authors are also resisting data sharing, and they are aided in this by various functionaries at Delft University, Copenhagen University, national committees and the journals themselves. In doing so, they effectively prevent, or at least disarm, further investigations. The message could not be clearer: stop digging.

Their close collaborators, old friends and various other insiders are also of the opinion that it is time to stop with retractions and investigations. We hear: “A lesson has been learned.” “Everyone got the point.” “Anyways, we know which papers are good and which ones not to trust.” “This is getting personal.” “Too much negative attention is bad for the field.” Each of these deserves a separate substack.

I should also mention that the two Delft retractions are not the only ones related to Majorana. A UCLA-Stanford paper was retracted from Science following an investigation by other people (I wrote about it when it happened). Science also placed an editorial expression of concern on a paper by a Copenhagen group after our investigation (see it here on Zenodo). This stops short of a retraction, so far, but reveals the editors’ opinion of that paper. Just around the corner, in the field of superconductivity, a high-profile retraction from Nature hit a year ago, and PRL has just made another, both concerning claims out of Rochester.

So indeed, there have been quite a number of retracted and questioned papers as of late in condensed matter physics. Perhaps it is already too many? Or, on the contrary, should there be more?

It is difficult to answer this question precisely because of all the secrecy surrounding the publication process. We cannot know how many people have approached editors or university officials with confidential concerns that were quashed, or how many never tried to do anything, out of fear or discouragement.

At the same time, a large fraction of us who have been around for a while, if not everyone, have read a paper that was obviously wrong, and not in a good way: not through reasonable scientific disagreement. I think many people would agree that there are papers out there that should be retracted, corrected, or at least commented on. I certainly hear stories like this all the time; people come to me with them and we share experiences.

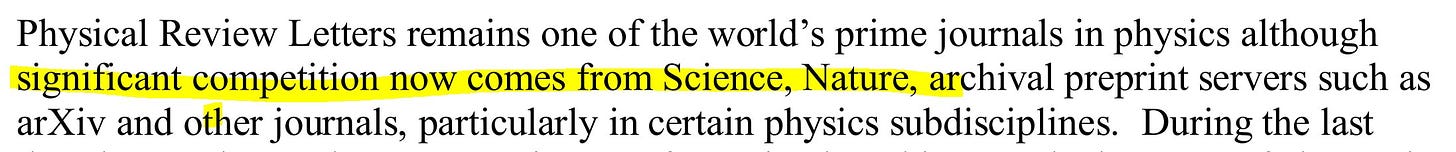

While I cannot provide you with rigorous statistics, I can do the next best thing and use a trick that physicists are very proud of: an order-of-magnitude estimate. Let’s take Physical Review Letters as a first example, a well-recognized journal that physicists regard as a good one. The first input is the total number of papers it publishes, which went from 5000/year to 2000/year. Over the past 20 years, this adds up to about 80,000 papers (order of magnitude!). The 20-year window is not an arbitrary choice. First, this is how long ago the last batch of physics retractions, related to Bell Labs, took place. Second, this is roughly when PRL began concerning itself with impact (more on that later and in future substacks).

Two screenshots from the PRL Evaluation Committee Report 2004 (with my highlighting)
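To make the first input explicit, here is a minimal sketch of the paper count. It assumes, as my own simplification and not a published PRL figure, that annual output declined linearly from 5000 to 2000 papers over the 20-year window; the function name `total_papers` is hypothetical. Under that assumption the total lands near 70,000, the same order of magnitude as the 80,000 used here.

```python
# Order-of-magnitude estimate of PRL papers published over a 20-year window.
# ASSUMPTION: annual output falls linearly from 5000 to 2000 papers/year;
# actual year-by-year counts are not used here.
import math


def total_papers(start_per_year: float, end_per_year: float, years: int) -> float:
    """Sum a linearly changing annual output over `years` years."""
    step = (end_per_year - start_per_year) / (years - 1)
    return sum(start_per_year + step * i for i in range(years))


total = total_papers(5000, 2000, 20)
print(round(total))  # 70000
# Same power of ten as the 80,000 used in the estimate.
print(math.floor(math.log10(total)) == math.floor(math.log10(80_000)))  # True
```

Any other plausible shape of the decline changes the total by tens of percent at most, which is exactly the precision an order-of-magnitude estimate ignores.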

By what process do articles end up published in Physical Review Letters? Fairly straightforward peer review, in which the editor mostly tallies up the referee votes. Reviews are often short and lack nuance; the overwhelming majority of reviewers neither see nor ask for additional data or materials. So the process is bound to let through some fraction of unreliable, incorrect, manipulated or fabricated claims. What rate should we assign to this? To me, 1% seems reasonable, and likely an underestimate, based on studies of scientific reliability in other fields. That would make 800 retractable papers over 20 years.

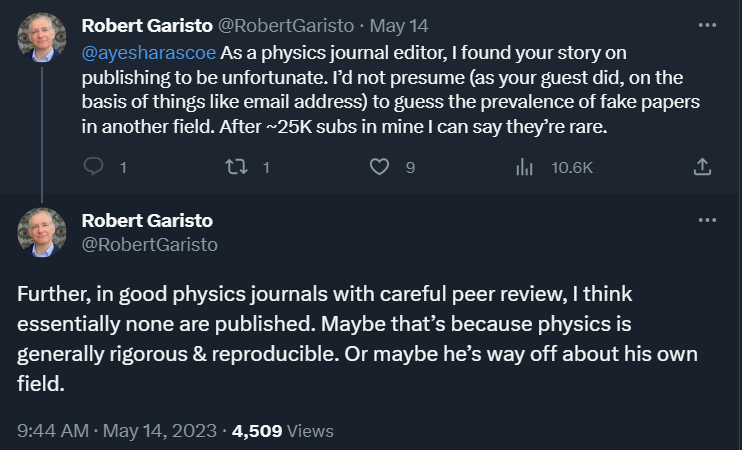

Maybe you would like to make an argument for physics exceptionalism: that physicists have a superhuman ability to detect BS. The chief editor of PRL is certainly of the opinion that his journal is just really good at selecting papers and at checking for fraud and other issues prior to publication. Physicists can, of course, also be substantially more moral than other scientists or ordinary people. Perhaps they don’t commit fraud, and don’t feel the pressure to publish in high-impact journals that would make them bend or alter their claims. Then you would choose an error rate of 0.1%, which is close to what the average rate of retractions across the whole literature, not specifically physics, seems to be. That is still around 100 papers from PRL over 20 years.
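The two scenarios can be written out as a short calculation. This is a minimal sketch that uses only the rough inputs from this post (80,000 papers, error rates of 1% and 0.1%); the names `PAPERS`, `YEARS` and `expected_retractable` are hypothetical. Note that 0.1% of 80,000 is 80, which is what “around 100” rounds from.

```python
# Expected number of "retractable" PRL papers under two assumed error rates.
# Inputs are the order-of-magnitude figures from the text, not measured data.
PAPERS = 80_000  # rough count of PRL papers over the window
YEARS = 20       # length of the window in years

ERROR_RATES = {
    "rate typical of other fields (1%)": 0.01,
    "'physics exceptionalism' rate (0.1%)": 0.001,
}


def expected_retractable(papers: int, rate: float) -> float:
    """Papers that should be retracted if a fraction `rate` is unreliable."""
    return papers * rate


for label, rate in ERROR_RATES.items():
    n = expected_retractable(PAPERS, rate)
    print(f"{label}: ~{n:.0f} papers, ~{n / YEARS:.0f} per year")
```

Spread over 20 years, even the optimistic scenario gives about 4 retractions per year from a single journal, and the 1% scenario about 40.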

Let me guess: you think that you would certainly have heard of 1000 retractions, but 100 retractions is not that many over two decades, just a few per year. Physics is big, and even if nothing happened in your field, there must have been some in other subfields. Right?

Let’s now turn to the Retraction Watch Database, a rather complete catalog of all recent retractions. It lists just 16 retractions from Physical Review Letters since 2003. (That count includes the newest one.) So each year, PRL published several thousand papers but retracted, on average, fewer than one! Perhaps retraction is just too harsh a measure, and the journal opts for corrections or comments instead? Those number in the low tens, and there is evidence that PRL has been making it harder to submit comments and get them published.

In my opinion, PRL’s retraction statistics are extreme. They are evidence not of how well the system works, but of the opposite: of how the journal suppresses quality-control mechanisms by refusing to act on critical concerns, and thereby undermines the scientific process. The retraction rate is between one and two orders of magnitude too low, and this sends a message that anything goes: as long as you somehow make it through peer review, you are all set and will face no consequences for any exaggeration, manipulation, fabrication or falsification. And if you know of any, there is nothing you can do. Indeed, retractions like the one that just happened concern very high-profile works; they took enormous effort to push through and were made reluctantly, under extreme community pressure, after the concerns had initially been dismissed. Any smaller problem is viewed as no big deal.
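The “one to two orders of magnitude” figure comes from comparing the observed count with the two scenarios. Here is a minimal sketch using only numbers from this post: 16 retractions since 2003, treated as a 20-year window (an assumption), and 80,000 papers at an error rate of 1% or 0.1%. The helper `orders_of_magnitude_gap` is a hypothetical name.

```python
# Compare the observed PRL retraction count with the rough expectations.
import math

OBSERVED = 16    # PRL retractions in the Retraction Watch Database since 2003
YEARS = 20       # ASSUMPTION: 2003 to now treated as a 20-year window
PAPERS = 80_000  # order-of-magnitude count of PRL papers over that window


def orders_of_magnitude_gap(expected: float, observed: float) -> float:
    """How many powers of ten separate the expected and observed counts."""
    return math.log10(expected / observed)


print(f"observed: {OBSERVED / YEARS:.1f} retractions per year")
for rate in (0.01, 0.001):
    expected = PAPERS * rate
    gap = orders_of_magnitude_gap(expected, OBSERVED)
    print(f"error rate {rate:.1%}: expected ~{expected:.0f}, "
          f"{expected / OBSERVED:.0f}x the observed count, "
          f"{gap:.1f} orders of magnitude (~{round(gap)} when rounded)")
```

The shortfall comes out as a factor of about 5 to 50, or roughly 0.7 to 1.7 powers of ten, which rounds to one and two orders of magnitude respectively.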

Perhaps all the wrong papers are among the rejected ones, and peer review works so well that there is no need for retractions? Except that nothing prevents submitting the same manuscript, without any changes, to any or all of about a dozen high-impact physics journals until it finally gets in somewhere, at which point it is safe forever. On top of this, anyone who has actually taken part in peer review, on either side of the process, knows that the degree of checking is often nominal, and inconsistent at best. Without mechanisms of post-publication review, the process is missing a vital quality check.

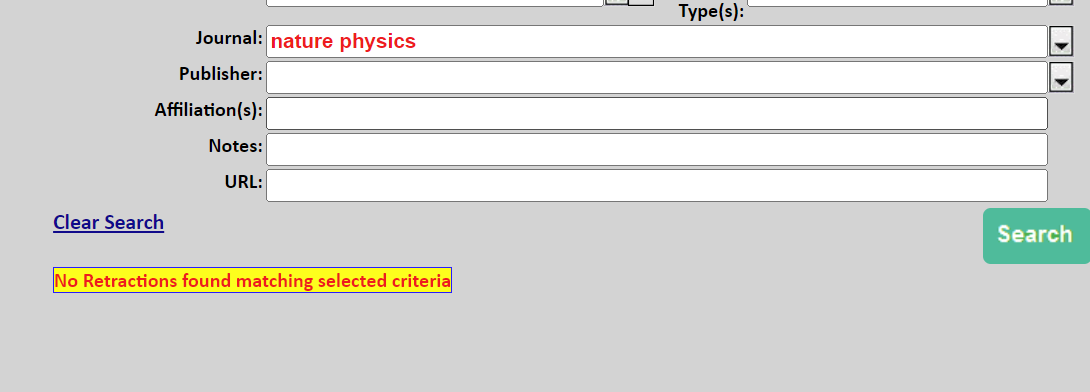

The culture of retraction is either token or non-existent. For example, Nature Physics is a journal that unseated Physical Review Letters in the impact publishing sector. It is a boutique journal, meaning it does not publish very many papers per year. But in its 18 years of existence, it has not retracted a single paper! Nature Nanotechnology has made a total of 1 retraction in its history. Nature Communications has made approximately 20, but, like PRL, it publishes thousands of papers per year! You can check my numbers using the Retraction Watch Database, or by scrolling through the journal websites and finding the retractions there.

But what is a product without quality control? A journal without a single retraction is a product that is 100% unreliable. It has no demonstrated mechanism for checking and correcting errors, which means that any single paper in that journal could be wrong. So why should we consume a product like this? Imagine it were a car: would you say, “we don’t know which parts work and which don’t, but we are still okay to drive”?

The unreasonably low retraction rate itself makes it harder to retract unreliable papers that have been clearly identified. Think about it: what does it mean for an editor never to have retracted a paper in their whole career? You can imagine that there is a very high psychological bar to imposing such a drastic sanction. What justifies this ultimate step, akin to criminal sentencing? Yet the editors at these journals are not only reluctant to cooperate with investigations or to help fellow researchers obtain data; they also come up with ridiculous arguments for why reproduction studies should not be published, for instance because they have lower impact than the original, unreliable claims.

And perhaps this is why, after a couple of retractions, they hold a meeting and decide to push back on further retractions. After all, the point has been made. Lessons learned. Time to move on. Otherwise there will be damage to the field. Or, more importantly, to the journals. Yet by resisting retractions, the high-impact journals only hasten their own demise. They show an inability to adapt to and embrace the evolving needs of their readers: a first-year graduate student who wants to know which papers they can trust; an assistant professor whose field is poisoned by unrealistic claims from bigger groups; a postdoc trying to build on a study they read; or a member of the general public who read a sensational newspaper piece picked up from a glossy science journal.